Curious People Build the Future

What LLMs can’t do—and why your next engineering hire still needs to think differently.

I still remember one of my earliest jobs in tech—not just because it was absurdly stacked with talent, but because of how we thought about hiring.

The company was called Zope Corporation. We were a Python shop, back when Python wasn’t mainstream. It was still the language of the curious. You didn’t learn Python because a bootcamp told you to. You learned it because you were exploring what was next in computing. And that’s exactly why we used it.

Our 30- or 40-person startup included much of the original PythonLabs team: Guido van Rossum, Tim Peters, Barry Warsaw, Fred Drake, and more. These were the folks shaping the language. I was a junior developer—but I was surrounded by people inventing the future of programming. And our CTO, Jim Fulton, was clear about what kind of engineer we wanted.

We didn’t hire Python developers just because we used Python.

We hired them because they were curious.

They thought critically about tools. They wanted to know what else was possible. They were already exploring the future before it arrived. That mindset was our competitive advantage.

Lately, I’ve been thinking a lot about that mindset—especially as the industry rushes to adopt LLMs.

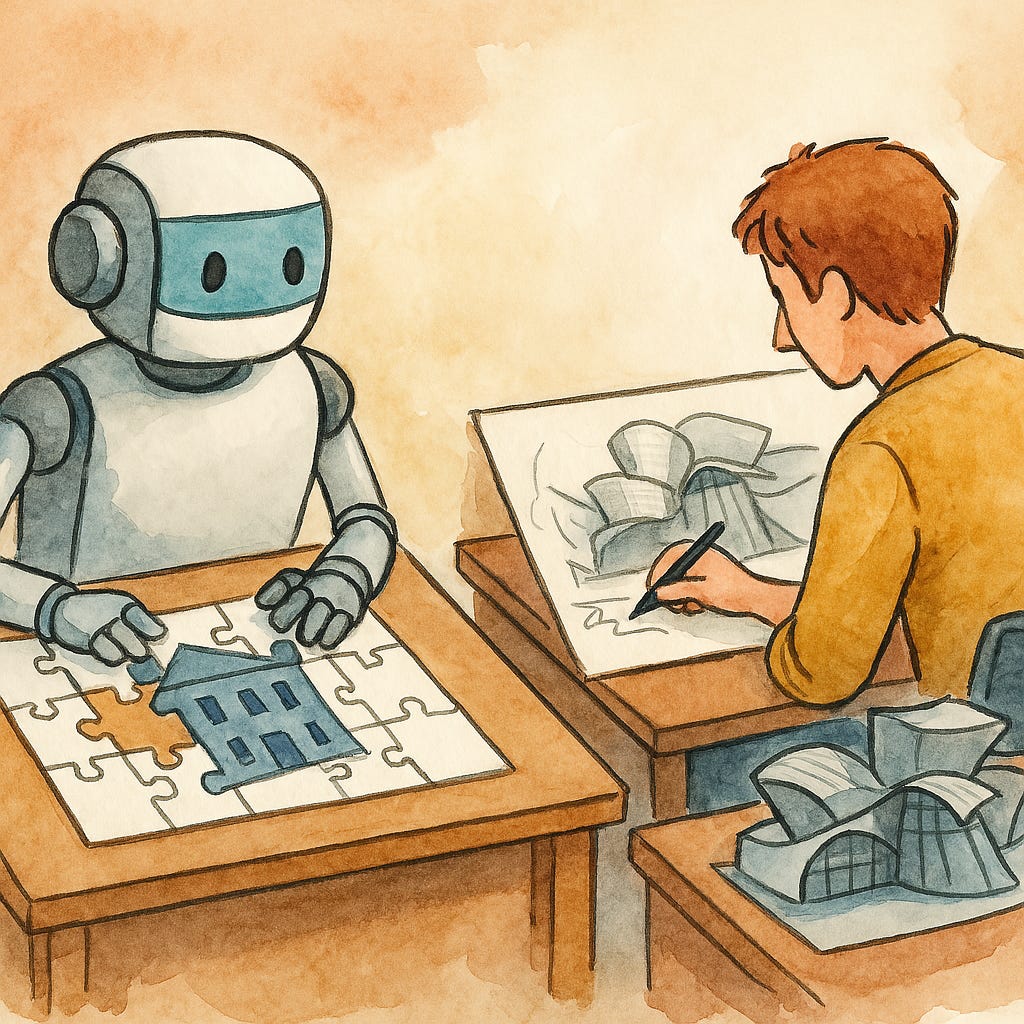

AI Can Write, But It Can’t Wonder

I’ve spent a lot of time lately working with tools like GPT, Copilot, Cursor, and Aider. The productivity gains are real. But what bothers me isn’t what the tools do. It’s what they don’t.

These models are incredibly good at democratizing knowledge. If you want to spin up a GraphQL API or scaffold a frontend, they’ll give you something that works. Sometimes, it’s even good.

But ask them to design a nuanced security architecture with cascading roles? Or to create a novel pagination strategy in Go? Or to decide whether GraphQL is even the right choice?

They flail.

Not because they’re unintelligent. Because they don’t reason. They remix. They don’t understand. They autocomplete. And they certainly don’t learn—not like we do, through experience, evolution, and integration over time.

LLMs are powerful. But they’re static: a model’s weights are fixed once training ends. Even with retrieval-augmented generation (RAG), we’re just cramming more facts into the context window. We’re not enabling growth. We’re stacking more knowledge into a frozen frame.
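To make the "frozen frame" point concrete, here is a minimal sketch of what retrieval adds, under loud assumptions: `Retrieve` and `BuildPrompt` are hypothetical names invented for this illustration, and the keyword matching stands in for the embedding search a real system would likely use. No real library or model API is shown.

```go
package main

import (
	"fmt"
	"strings"
)

// Retrieve is a toy stand-in for a retriever (hypothetical, for illustration).
// It returns the documents that contain at least one of the query's
// whitespace-separated words as a case-insensitive substring.
func Retrieve(query string, docs []string) []string {
	words := strings.Fields(strings.ToLower(query))
	var hits []string
	for _, d := range docs {
		lower := strings.ToLower(d)
		for _, w := range words {
			if strings.Contains(lower, w) {
				hits = append(hits, d)
				break // one match is enough; move on to the next document
			}
		}
	}
	return hits
}

// BuildPrompt prepends the retrieved text to the question.
// Nothing about the model changes here; only the input string grows.
func BuildPrompt(query string, retrieved []string) string {
	var b strings.Builder
	b.WriteString("Context:\n")
	for _, d := range retrieved {
		b.WriteString("- " + d + "\n")
	}
	b.WriteString("\nQuestion: " + query)
	return b.String()
}

func main() {
	docs := []string{
		"Go added type parameters in version 1.18.",
		"GraphQL lets clients choose the fields they receive.",
	}
	q := "When did Go get generics?"
	fmt.Println(BuildPrompt(q, Retrieve(q, docs)))
}
```

The whole mechanism is string assembly in front of an unchanged model. That is useful, but it is not learning.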

So yes—LLMs are excellent at accelerating the known.

But they’re poor companions for exploring the unknown.

What Happens to New Languages?

Here’s the part that really worries me.

If I were inventing a new language or paradigm today, I’d be concerned. Unless I had the resources to fine-tune an LLM, my innovation would be invisible to the dominant tools developers now rely on.

I’ve seen this firsthand.

In TypeScript, the LLM and I move fluently. In Go, I can ship, but it’s not second nature. When I ask an LLM for help in Go, the depth thins. The model avoids generics. It leans into older patterns. It misses the modern idioms.
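As an illustration of the gap I mean, here is a minimal sketch contrasting a pre-generics helper with its generic equivalent (type parameters arrived in Go 1.18). `MapAny` and `Map` are hypothetical names written for this example, not output captured from any particular model.

```go
package main

import "fmt"

// MapAny is the older, pre-generics style: values travel as interface{}
// and the caller must assert concrete types at runtime.
func MapAny(in []interface{}, f func(interface{}) interface{}) []interface{} {
	out := make([]interface{}, 0, len(in))
	for _, v := range in {
		out = append(out, f(v))
	}
	return out
}

// Map is the generic version: element types are checked at compile time.
func Map[T, U any](in []T, f func(T) U) []U {
	out := make([]U, 0, len(in))
	for _, v := range in {
		out = append(out, f(v))
	}
	return out
}

func main() {
	// Older pattern: type assertions on the way in and on the way out.
	old := MapAny([]interface{}{1, 2, 3}, func(v interface{}) interface{} {
		return v.(int) * 2
	})
	fmt.Println(old[0].(int))

	// Generic pattern: no assertions; the type arguments are inferred.
	doubled := Map([]int{1, 2, 3}, func(n int) int { return n * 2 })
	fmt.Println(doubled)
}
```

Both versions work. The difference is that a wrong type in the first one surfaces as a runtime panic, while the second one fails to compile.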

And if I’m trying to build something truly new? It’s barely any help at all.

The model can’t guide me because nobody has guided it. It only knows what it’s been taught.

And that’s a problem.

We risk ossifying the status quo, not because it’s better, but because it’s overrepresented in the data. LLMs privilege popularity. That’s not a technical failing. It’s a community risk, unless we deliberately keep making room for languages and ideas the models haven’t seen yet.

The Return of the Curious Engineer

That brings me back to Zope.

We didn’t know it at the time, but we were preparing for a future where curiosity would matter more than credentials. That future is here.

Yes, we’ll still hire engineers who can glue standard components together. That work matters. AI tools will help them go faster.

But every business that scales eventually hits problems that aren’t in the training data.

Problems that demand invention.

Problems that require people who wonder.

When we hit those walls, we need engineers who do more than prompt.

We need engineers who ask better questions than an LLM ever could.

The Software Boom Isn’t Slowing Down

Some say LLMs will reduce the need for developers. I think they’ll increase it.

This is the Jevons paradox in action: when a resource becomes cheaper or more efficient to use, total consumption of it tends to rise rather than fall. As AI makes software faster to write, we’ll build more of it: more systems, more tools, more ideas turned into code.

So yes, we’ll need more people. But not just any people.

We’ll need developers who understand the business deeply, who can wield AI tools with confidence and care.

And we’ll need developers who are curious. Who aren’t satisfied with remixing what exists. Who are already thinking about what’s next.

Closing Thoughts

We’re not done inventing.

If we want to keep pushing the boundaries of what’s possible in software, we can’t just optimize for productivity. We have to keep hiring for curiosity.

So if you’re leading an R&D team right now, ask yourself:

Are you hiring people who can follow the path?

Or people who can build the next one?

Because LLMs can follow. But only the curious still build the future.